
Gift box with AI

As I've mentioned before, I have a resin (photopolymer) 3D printer, and now and then I use it to bring ideas to life. One of them was a gift box for earrings.

The three pairs of earrings were shaped like wings, so the idea was to make the box in a matching style. Through trial and error I arrived at roughly this pipeline: prompt generation (ChatGPT) > image generation from those prompts (local flux dev) > 3D model generation (tripo3d).

Prompt generation

If you don't know exactly what you want, or you want variety, ChatGPT can easily generate the ideas you need as text. DALL-E is currently not competitive enough to use directly for image generation, so we ask ChatGPT for text only.

What I wanted was a heart-shaped box whose halves would be wings.

Request:

I want to make a gift box for earrings (3 pairs). The box should be shaped like a heart made up of two wings, viewed from above, so that it's easier for me to turn it into a 3D model for printing.

I need a prompt for flux that will generate a top-down view of this box.

Response:

Top-down view of a heart-shaped box, where the heart is formed by two large, symmetrical wings. The wings curve inward, meeting at the center to form the pointed bottom of the heart. The wings have subtle feather-like textures, with lines running along the surface to suggest individual feathers. The surface of the wings is smooth and gently curved. The heart's edges are clean and sharp, and the center line where the wings meet is distinct but seamless. Inside the heart, visible from the top, there are three evenly spaced compartments, each designed to hold a pair of earrings. The overall design should be sleek and minimal, with clear symmetry between the wings. Soft lighting highlights the contours and textures of the wings, and the color is a soft, neutral tone such as white or light grey.

In fact, we don't really need such a long prompt: thanks to its built-in text encoder, flux understands plain text.

Image generation

So, why flux? Anyone familiar with AI-generated content hardly needs an explanation of why DALL-E is not competitive: it has its own distinctive, unrealistic, semi-cartoonish style. For example, here is the same prompt rendered in DALL-E:

It's OK overall, but the view is not top-down. Generation time is tolerable, but there are usage limits. With flux running locally, where it can be left for a few hours to generate a pile of variants, I don't see much point in torturing ChatGPT.

We load the prompt into flux and ask for 300 images. Why 300? Because I can. One generation takes about 1 minute, so the whole run took around 5 hours.
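The post does not say which frontend was used to run flux locally, so the following is only a minimal sketch of such a batch run, assuming the Hugging Face `diffusers` library and its `FluxPipeline`. The helper names (`plan_batch`, `estimate_hours`, `generate`), the output folder, and the sampler settings are all illustrative assumptions, not the author's setup.

```python
from pathlib import Path


def plan_batch(count, base_seed=0, out_dir="flux_out"):
    """Return (seed, output_path) pairs, one per image, each with its own seed."""
    out = Path(out_dir)
    return [(base_seed + i, out / f"box_{base_seed + i:05d}.png") for i in range(count)]


def estimate_hours(count, minutes_per_image=1.0):
    """Rough wall-clock estimate for a sequential batch."""
    return count * minutes_per_image / 60.0


def generate(prompt, jobs):
    """Render one image per (seed, path) job. Heavy: loads the full model."""
    # Imported lazily so the planning helpers work without torch/diffusers.
    import torch
    from diffusers import FluxPipeline

    pipe = FluxPipeline.from_pretrained(
        "black-forest-labs/FLUX.1-dev", torch_dtype=torch.bfloat16
    )
    pipe.enable_model_cpu_offload()  # trades speed for lower VRAM use

    for seed, path in jobs:
        path.parent.mkdir(parents=True, exist_ok=True)
        # A fixed seed per image makes any variant reproducible later.
        generator = torch.Generator("cpu").manual_seed(seed)
        image = pipe(
            prompt,
            guidance_scale=3.5,        # assumed setting, tune to taste
            num_inference_steps=50,    # assumed setting, tune to taste
            generator=generator,
        ).images[0]
        image.save(path)
```

Calling `generate(prompt, plan_batch(300))` would then run the whole batch unattended. Because the seed is in the file name, a variant you like can be regenerated or refined later.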

And this is just over a third of all the variants. It isn't even the limit: in one of my projects, which I'll cover in separate articles, I generated 1500 images, though with far more variety.

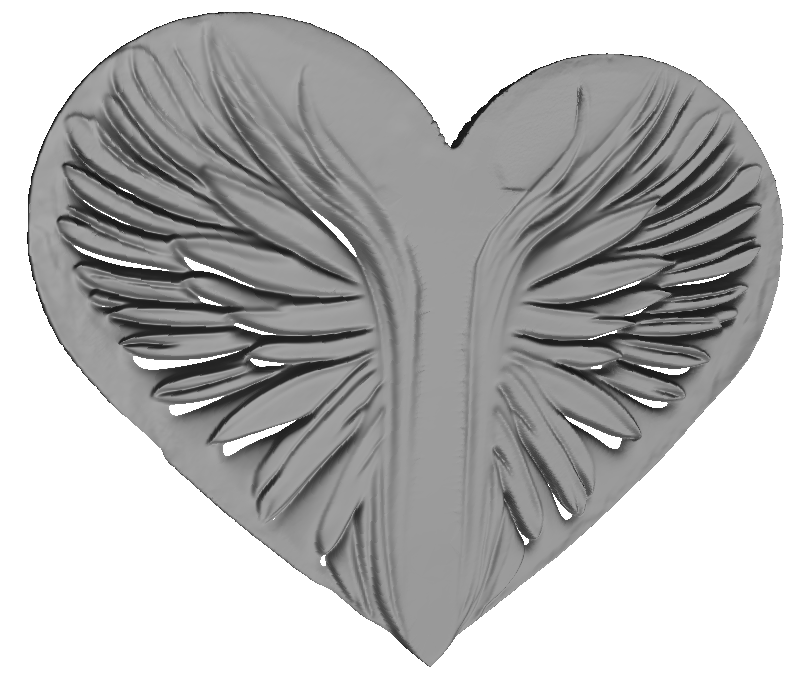

I picked the one I liked best:

3D model

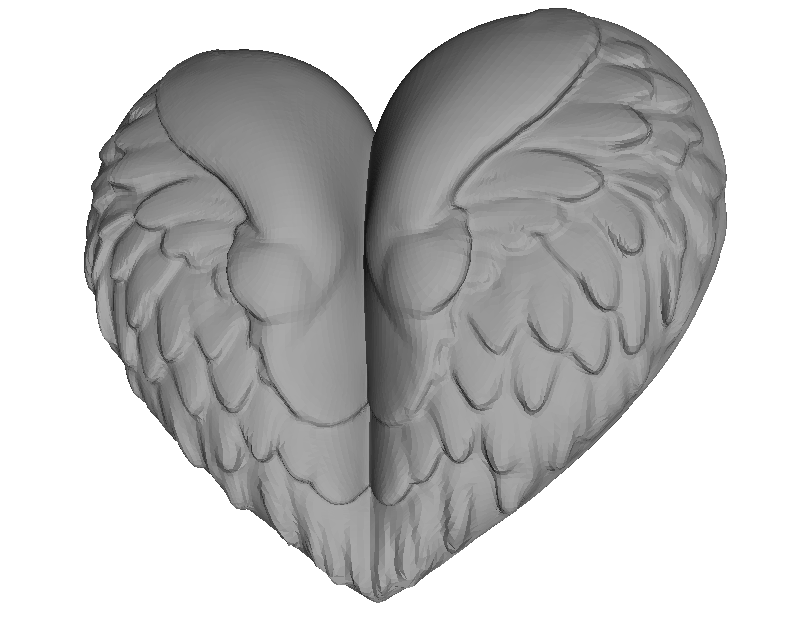

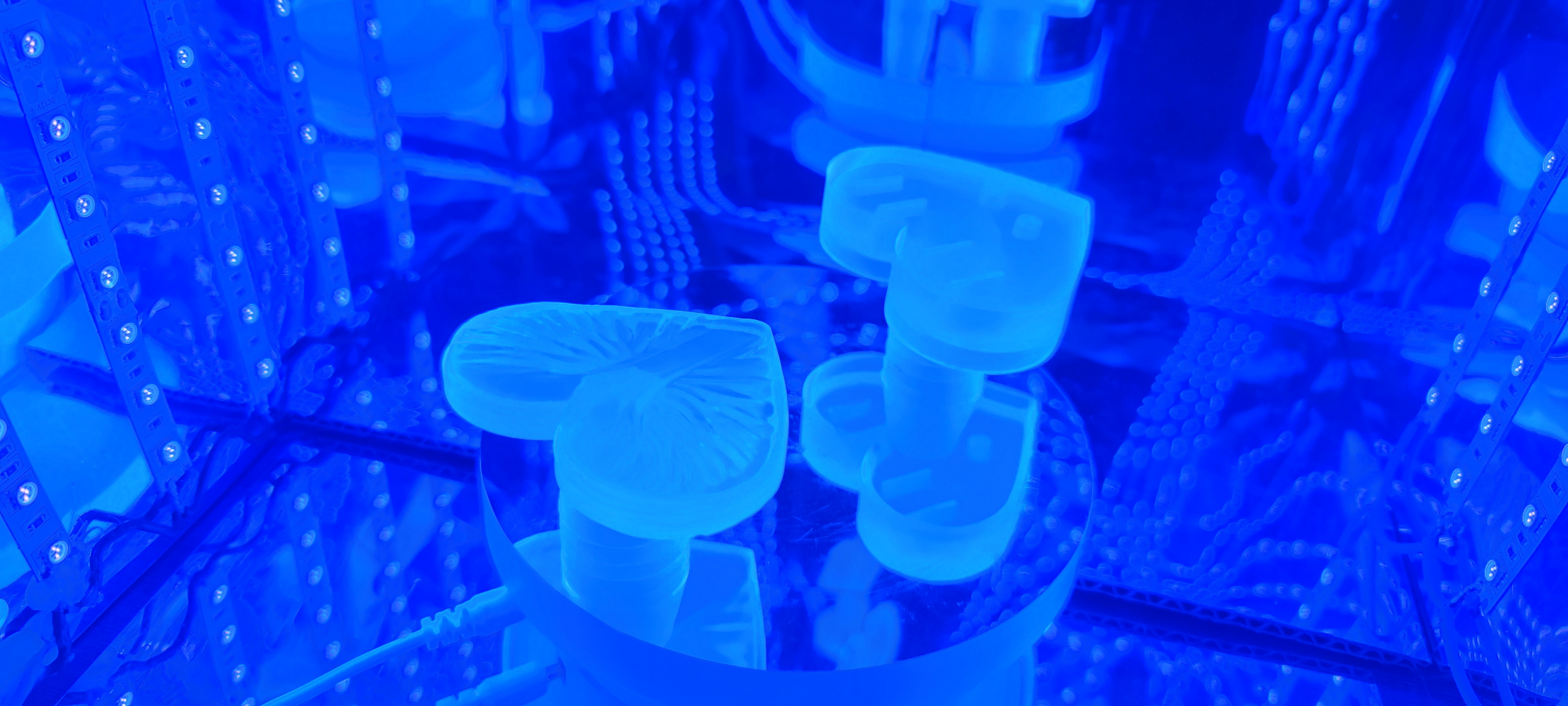

At the time of writing, AI can help here too. tripo3d (rodin) is probably the best solution on the market right now. You just open the site, upload the picture, and a few minutes later you get a finished 3D model:

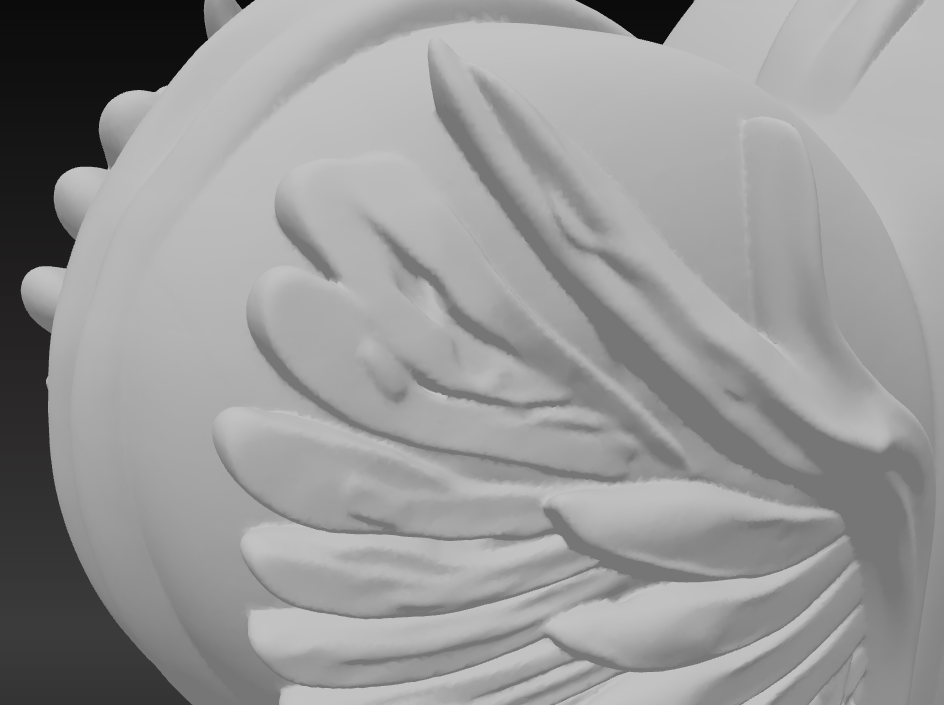

But the devil is in the details: even now, artifacts are visible at high zoom (to a large extent they result from using a low-quality source image):

And that's the current state. I made the box six months ago, before tripo3d was updated to its current version. Back then it looked even worse (shown here with a different picture):

This may be good enough for an FDM printer, but for resin printing the model is too noisy: all these artifacts would show up on the print.

Next I tried generating a normal map from the picture to feed into Blender, but img2img normal map generators are even worse than img2model. The best one I found looked like this:

Modeling

So for now I decided to do the modeling stage without AI tools. I used the original flux image as the normal map. The result didn't quite match the 3D renders, but the texture still came out interesting and recognizable:
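The post doesn't describe how the image was wired up in Blender. To give a rough idea of how a picture can become surface relief, here is a minimal sketch that treats pixel brightness as height and derives a tangent-space normal map from it by finite differences. The function name `height_to_normals`, the `strength` parameter, and the green-channel direction are illustrative assumptions; this is not the author's workflow.

```python
import math


def height_to_normals(height, strength=1.0):
    """Convert a 2D height map (rows of floats in 0..1) to RGB-encoded normals.

    Returns rows of (r, g, b) tuples in 0..255. The sign of the green channel
    differs between tools (OpenGL vs DirectX style); here +Y points down the rows.
    """
    rows, cols = len(height), len(height[0])

    def h(r, c):
        # Clamp coordinates so border pixels reuse their nearest neighbour.
        r = min(max(r, 0), rows - 1)
        c = min(max(c, 0), cols - 1)
        return height[r][c]

    result = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Central differences approximate the slope in each direction.
            dx = (h(r, c + 1) - h(r, c - 1)) * 0.5 * strength
            dy = (h(r + 1, c) - h(r - 1, c)) * 0.5 * strength
            nx, ny, nz = -dx, -dy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            # Map each component from [-1, 1] to [0, 255].
            row.append(tuple(
                int(round((v / length * 0.5 + 0.5) * 255)) for v in (nx, ny, nz)
            ))
        result.append(row)
    return result
```

A flat area yields the familiar light-blue normal-map color, while brightness ramps tilt the normal away from the slope. This also shows why a noisy or painterly source image produces a rough relief: every brightness change is read as a change in height.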

Next we smooth out the roughness, do a bit of manual touch-up by sculpting in Blender, and model the lid and the box in SolidWorks (I have since switched to Fusion 360), with cutouts sized so that the earrings don't rattle around or fall out.

Result

Then we print it, remove the supports, and send it to the "tanning bed" (the UV curing station):

That's not all: we sand it, varnish it, and get the final version:
